Most of the talk we hear about new architectures for application environments focuses on breaking monolithic applications into smaller, discrete services that make up a distributed application – an approach often referred to as microservices. A parallel architectural shift is underway, and while it’s not enjoying the same hype as microservices, I predict it will be just as significant. I’m referring to the collapse of the tiers that handle ingress and egress traffic for applications into a single, cloud‑agnostic software layer.

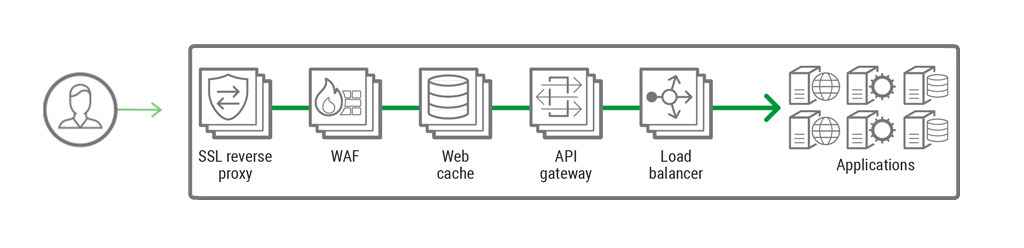

As my colleague Owen Garrett discussed in a recent blog post, typical ingress‑egress traffic pipelines are complex. It’s not uncommon to see five separate tiers – one each for reverse proxy, web application firewall (WAF), web cache, API gateway, and load balancer – frontending an enterprise’s mission‑critical applications. And that might not be all! At a recent meeting in London, one large gaming company lamented to me, “I wish our architecture were that simple.”

The problem with this typical approach is that traffic has to hop through numerous functional tiers before it finally reaches the applications that actually generate the result the user is requesting. Each tier represents a possible point of failure and brings with it expense, management overhead, and – most importantly – latency. Even if you’re a fan of using discrete service components in your application delivery stack, it’s often not worth the performance penalty.
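The cost of stacking tiers can be made concrete with standard series-system arithmetic. Assume, for illustration only, that tiers fail independently and that each one is available 99.9% of the time. Because every request must pass through every tier, availabilities multiply while per-tier latencies add (to a first approximation), where $t_i$ covers both the processing time in tier $i$ and the network transit to reach it:

$$
A_{\text{pipeline}} = \prod_{i=1}^{n} A_i, \qquad T_{\text{pipeline}} = \sum_{i=1}^{n} t_i
$$

$$
A_{\text{pipeline}} = 0.999^{5} \approx 0.99501
$$

Under these assumptions, five tiers raise expected unavailability from 0.1% to roughly 0.5%, about five times that of a single tier, while every extra hop adds its own $t_i$ to the response time.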

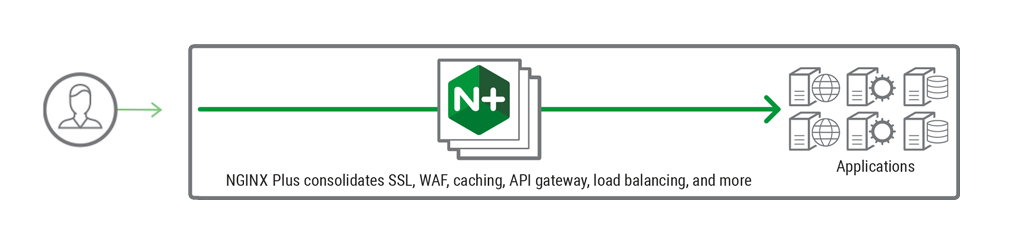

Many of our customers have told us they’re implementing a better approach: one that preserves the functionality of the ingress‑egress tiers, but in a simplified architecture with fewer hops and less overhead. We call it a dynamic application gateway.

Using NGINX to Architect a Dynamic Application Gateway

The NGINX Application Platform is a suite of application development and delivery technologies designed to help with both application frontend and backend architectures. Recent enhancements in NGINX Plus R16 introduced a new paradigm for ingress‑egress traffic, in which a single frontend tier combines the functions of authentication, firewall, caching, and load balancing. With clustering, you can scale this tier out dynamically to maximize performance and utilization. Fewer hops improve performance, reduce the number of points of failure, and avoid the cost of supporting tools from multiple vendors.

What Is a Dynamic Application Gateway?

If you were at NGINX Conf 2018, you heard about the concept of a dynamic application gateway. But let’s set the context with a simple definition:

A dynamic application gateway combines application delivery technologies – including proxying, SSL termination, WAF, caching, API gateway, and load balancing – into a single, dynamic tier for north‑south traffic in and out of any application and across any cloud.

This means a dynamic application gateway:

- Acts as an intelligent control point for optimizing traffic delivery for apps and APIs

- Shares a single configuration and key‑value store across a tier of multiple instances acting as one distributed, elastic gateway

- Combines app delivery controller (ADC), API gateway, and WAF functionality

- Handles all north‑south traffic flowing in and out of your apps

- Is implemented by infrastructure teams but designed for app and DevOps needs

- Differs from:

  - Existing software load balancers because it integrates API gateway functionality

  - Existing API gateway solutions because it integrates load balancing and WAF
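To make the definition concrete, here is a minimal Python sketch of the idea under clearly stated assumptions: `SingleTierGateway`, `Request`, `Response`, the `X-API-Key` header, and the toy WAF rule are all hypothetical names invented for illustration. It is not NGINX Plus code or configuration. It only shows how API gateway, WAF, caching, and load-balancing functions can run in one pass through a single tier that shares one key-value store, rather than as five separate hops.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Callable, Dict, Set


@dataclass
class Request:
    method: str
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str
    served_by: str = "gateway"  # which stage produced the response


class SingleTierGateway:
    """Hypothetical toy gateway: API-key auth, a naive WAF rule, a GET cache,
    and round-robin load balancing applied in ONE pass instead of five tiers.
    Illustration only; this is not how NGINX Plus is implemented."""

    def __init__(self, upstreams: Dict[str, Callable[[Request], str]], api_keys: Set[str]):
        self.upstreams = upstreams
        self.api_keys = api_keys
        # One key-value store shared by all functions (here: cached bodies).
        # A clustered tier would synchronize this across instances; not shown.
        self.store: Dict[str, str] = {}
        self._next_upstream = cycle(list(upstreams))

    def handle(self, req: Request) -> Response:
        # API gateway function: reject requests without a known API key.
        if req.headers.get("X-API-Key") not in self.api_keys:
            return Response(401, "unauthorized")
        # WAF function: a deliberately simplistic blocking rule.
        if "../" in req.path or "<script" in req.path.lower():
            return Response(403, "blocked")
        # Cache function: serve repeated GETs without touching an upstream.
        key = f"{req.method} {req.path}"
        if req.method == "GET" and key in self.store:
            return Response(200, self.store[key], served_by="cache")
        # Load-balancing function: round-robin across upstream apps.
        name = next(self._next_upstream)
        body = self.upstreams[name](req)
        if req.method == "GET":
            self.store[key] = body
        return Response(200, body, served_by=name)


if __name__ == "__main__":
    gw = SingleTierGateway(
        upstreams={"app1": lambda r: f"app1 {r.path}", "app2": lambda r: f"app2 {r.path}"},
        api_keys={"demo-key"},
    )
    auth = {"X-API-Key": "demo-key"}
    for req in [Request("GET", "/a", auth), Request("GET", "/a", auth),
                Request("GET", "/b", auth), Request("GET", "/a")]:
        resp = gw.handle(req)
        print(resp.status, resp.served_by, resp.body)
```

Running the script prints `200 app1 app1 /a`, `200 cache app1 /a`, `200 app2 app2 /b`, and `401 gateway unauthorized`. Because every check runs in the same process, a rejected request never costs an upstream hop, and a cache hit skips load balancing entirely.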

Five Reasons You Need a Dynamic Application Gateway

What’s inspired this new approach to delivering applications? Five things:

- Shifting of infrastructure closer to apps – Thanks to Infrastructure as Code, app and DevOps teams can provision (and often program) their own infrastructure. As a result, application teams can move ingress‑egress functions into their own application stack.

- Changing user requirements – Customers expect their websites and mobile apps to just work. Yet businesses – particularly retailers – face fluctuating traffic. Dynamically scaling ingress‑egress functions helps deliver a consistent, compelling user experience as load rises and falls.

- Evolving cloud capabilities – Public clouds require a different approach to handling ingress‑egress traffic. You can’t take your hardware with you to the cloud. Also, elastic computing resources in the cloud make it possible to scale based on fluctuating traffic.

- Maturing software‑defined networks – Software‑defined networking has focused on the routing and switching layers. But in the cloud these functions are mostly abstracted away. Now the focal point is Layer 4–7 networking software with fine‑grained traffic control.

- Emerging security threats – Evolving attack vectors like DDoS require more “cushion” on the frontend. Web application firewalls have evolved to detect threats, but mitigation needs to happen dynamically at the ingress‑egress tier to keep up with the ever‑changing threat landscape.

Given all these trends, now is the time to review the architecture for your ingress‑egress traffic pipeline. Building a dynamic application gateway that combines delivery technologies like proxying, WAF, caching, API gateway, and load balancing helps you respond to changing needs, simplifies your architecture, and paves a path to more dynamic backend application architectures like microservices.

Get started with a free 30-day trial of NGINX Plus today or contact us to discuss your use cases. The trial experience combines NGINX Plus with NGINX ModSecurity WAF to provide a single, dynamic application ingress‑egress solution.

[Editor – NGINX ModSecurity WAF officially went End-of-Sale as of April 1, 2022 and is transitioning to End-of-Life effective March 31, 2024. Trials of NGINX Plus now include NGINX App Protect WAF and DoS instead. For more details, see F5 NGINX ModSecurity WAF Is Transitioning to End-of-Life on our blog.]